ELU

-

class

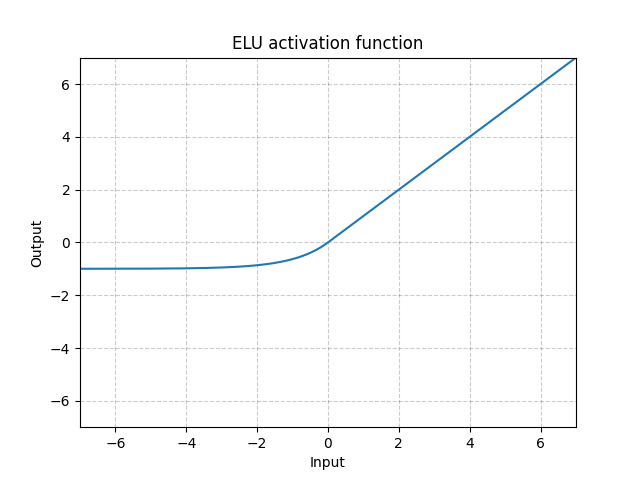

torch.nn.ELU(alpha=1.0, inplace=False)[source]¶ Applies the Exponential Linear Unit (ELU) function, element-wise, as described in the paper: Fast and Accurate Deep Network Learning by Exponential Linear Units (ELUs).

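ELU is defined as:

$$\text{ELU}(x) = \begin{cases} x, & \text{if } x > 0 \\ \alpha \cdot (\exp(x) - 1), & \text{if } x \leq 0 \end{cases}$$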
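ELU passes positive inputs through unchanged and maps non-positive inputs to α·(exp(x) − 1), which saturates toward −α for large negative x. One way to check this is to implement the formula directly with `torch.where` and compare it against the built-in `torch.nn.functional.elu`. This is a minimal sketch for illustration only. The helper name `elu_reference` is hypothetical and not part of PyTorch.

```python
import torch
import torch.nn.functional as F


def elu_reference(x: torch.Tensor, alpha: float = 1.0) -> torch.Tensor:
    # Hypothetical helper: positive inputs pass through unchanged;
    # non-positive inputs become alpha * (exp(x) - 1), saturating toward -alpha.
    return torch.where(x > 0, x, alpha * (torch.exp(x) - 1))


x = torch.linspace(-5.0, 5.0, steps=11)
for alpha in (0.5, 1.0, 2.0):
    # The hand-written formula should agree with PyTorch's built-in ELU.
    assert torch.allclose(elu_reference(x, alpha), F.elu(x, alpha=alpha))
```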

- Parameters

alpha (float) – the α value for the ELU formulation. Default: 1.0

inplace (bool) – can optionally do the operation in-place. Default: False
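The sketch below illustrates the two parameters on a small tensor. `alpha` scales only the non-positive branch, so outputs for x > 0 are unchanged. With `inplace=True` the module writes the result into the input tensor's own storage instead of allocating a new tensor. The input values are arbitrary and chosen for illustration.

```python
import torch
from torch import nn

x = torch.tensor([-1.0, 0.0, 2.0])

# alpha only affects the x <= 0 branch, alpha * (exp(x) - 1).
out_default = nn.ELU()(x)           # alpha = 1.0
out_scaled = nn.ELU(alpha=0.5)(x)   # negative branch is halved
assert torch.allclose(out_scaled[0], 0.5 * out_default[0])
assert torch.equal(out_scaled[1:], out_default[1:])  # 0 and positive values unaffected

# inplace=True overwrites the input tensor instead of returning a new one.
y = x.clone()
result = nn.ELU(inplace=True)(y)
assert result.data_ptr() == y.data_ptr()  # same underlying storage
assert torch.allclose(y, out_default)     # y itself now holds ELU(x)
```

In-place activation saves memory, but it discards the original input values. Use it only when nothing else needs them.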

- Shape:

Input: (*), where * means any number of dimensions.

Output: (*), same shape as the input.
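Because ELU is applied element-wise, the module accepts inputs with any number of dimensions and returns an output of identical shape. The shapes below are arbitrary examples.

```python
import torch
from torch import nn

m = nn.ELU()
# Any input shape is accepted, and the output shape matches the input.
for shape in [(5,), (2, 3), (4, 3, 8, 8)]:
    x = torch.randn(shape)
    assert m(x).shape == x.shape
```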

Examples:

>>> import torch
>>> from torch import nn
>>> m = nn.ELU()
>>> input = torch.randn(2)
>>> output = m(input)
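A typical use is as the hidden activation inside a model. The sketch below assumes a small multilayer perceptron built with `nn.Sequential`. The layer sizes (10 → 32 → 1) and batch size are arbitrary choices for illustration.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Small MLP with ELU between the linear layers (sizes chosen arbitrarily).
model = nn.Sequential(
    nn.Linear(10, 32),
    nn.ELU(alpha=1.0),
    nn.Linear(32, 1),
)

batch = torch.randn(8, 10)  # 8 samples with 10 features each
pred = model(batch)
print(pred.shape)  # torch.Size([8, 1])

# Gradients flow back through the ELU layer to the first Linear layer.
pred.sum().backward()
assert model[0].weight.grad is not None
```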